I frequently find myself providing reality checks for folks who have bought into some of the myths floating around about wine. There are a lot of them, so I've picked some of my favourites. A few of these are cited so frequently that a good amount of explanation and debunking is already available online. However, I have included them here to provide a more complete set and to give my personal take on these beliefs. In the end I came up with enough myths, 18 in all, that I have divided them into two sections to keep my postings to a reasonable length – the second half will be the subject of my next post.

The first eight myths that I discuss all have to do with buying wine – the first four are of interest to wine buyers in general, while the remaining four are specifically geared to Ontario residents who are stuck with the LCBO.

Buying Wine

1. High Scores / High Prices / Varietals Indicate the Best Wines

There are many clues that the average consumer uses to help choose a wine, such as critics' scores, pricing, the label, varietal vs. regional labelling, and recommendations from salespeople. The problem is that most of these indicators contain a kernel of validity surrounded by a thick husk of irrelevancy for any individual purchaser.

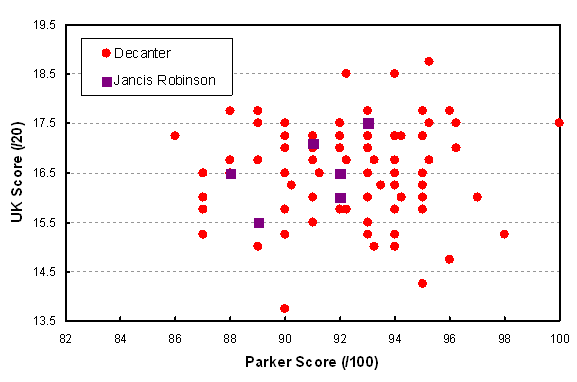

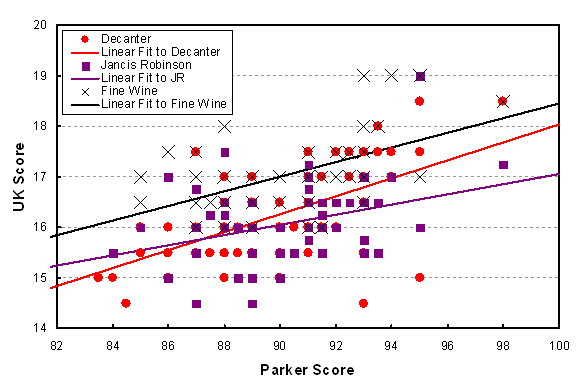

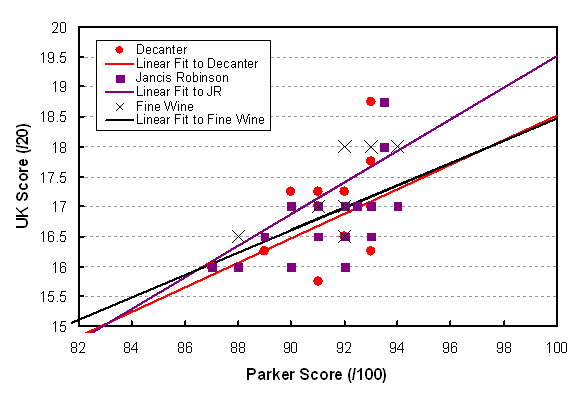

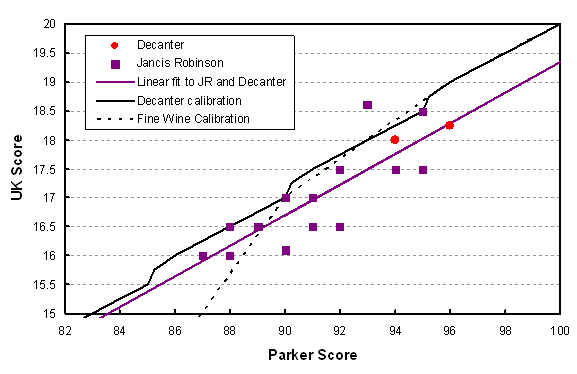

First: scores and critics' recommendations. Take a look at my post on scores and you will see that the correlations between scores and quality, and between different critics' scores, are weak at best. A better approach is to try wines recommended by a variety of critics until you find one (or more) whose tastes are a reasonable match for your own, and then use that critic as your guide. Admittedly, this takes quite a bit of work, the very opposite of what easy-to-understand scores are meant to offer. But then, most of the work is drinking wine after all, so buck up!

There is, of course, some correlation between price and quality, but remember that price is really determined by what people will pay. A high-quality wine generates consumer demand, which lets the producer charge a higher price. Every other consideration is subordinate to that point. For example, you often hear that low yields, organic cultivation, hand-crafted wine, good oak, etc. cost money, so the wine must cost more. However, consumers will only pay more if the wine is actually better than a more industrially produced version; otherwise the winery will not be able to continue along that path. Famous names and critical hype also generate demand and higher prices, quite apart from what is in the bottle. That's why the best quality/price ratios are found in lesser-known regions: southern Italy, southern France, northwestern Spain, Greece, Portugal, and Austria, for example.

Varietal labelling is favoured by many consumers because grape names are easy to remember and feel comfortably reliable. However, most of the great wine regions of Europe do not use varietal labelling, so the presence of a grape name on the label is in no way an indicator of quality – there is no correlation.

2. All Wines Should (or Should Not!) be Under Screw Cap

Here I am wading into a highly controversial subject. Producers from Down Under (Australia and New Zealand) insist that all wines should be under screw cap. In Europe only a very small percentage are sold this way. Who’s right? Well, here are my thoughts.

First, any wine intended to be drunk within a year of purchase should be under screw cap – this dictum actually covers the bulk of wine. The same goes for any white wine meant to be consumed within a few years. That leaves red wines for medium- to long-term aging and the few white wines able to age for a decade or more (e.g. most quality Rieslings, white Burgundy, vintage Champagne, and quality sweet wines). Here the choice should depend on the tastes of the consumer, because wine does age differently under different closures. Many people are familiar with and enjoy the flavours of mild oxidation and the related transformations that provide complexity in older wines. Others prefer the retention of fresher fruit flavours, but at the risk of chemical reduction, which produces less familiar (often sulphur-based) flavours that may or may not be appreciated. The producer's decision will therefore depend on some combination of tradition and the preferences of the consumer base for that particular wine.

Second, the risk of cork taint (TCA, the cause of "corked" wine) has been greatly reduced over the past couple of decades. Where I used to find a corked bottle every week or two, now it's only once or twice a year. And remember that TCA doesn't just arise from cork treatment – it can come from the winery environment, and is therefore even found from time to time in screw-capped bottles.

Lastly, let's ditch the fatuous statement that using a cork is some prehistoric practice of "sticking a piece of tree bark in a bottle." One could just as easily mock the practice of "sticking a piece of industrially modified petroleum on the end of a bottle" (i.e. plastic, in particular the plastic insert that makes the seal in a screw cap). In fact, cork is one of the most amazing materials known, with properties that cannot be duplicated in any laboratory. One might as well mock the use of a hunk of tree to make my computer desk or the frame of my house. Let's have a rational discussion, please. And don't forget that cork is a green, renewable resource, unlike metal and petroleum products. There is room for both closures in the business, and neither is an indicator of quality, low or high.

3. Avoid Wine from a Region where there has been a Scandal

I know someone who won't buy Chateau Pontet-Canet, one of the great estates of Bordeaux, because over forty years ago its owner at the time (Cruse) was caught beefing up its cheaper wine with stiff southern swill. Other folks won't buy Austrian wine because of the antifreeze scandal of thirty years ago. And what about the Brunello scandal from the past decade? Should you buy Brunello di Montalcino?

There are two points to keep in mind here. First, wine has been adulterated (to stretch the limited volume of famous quality wine available) since the beginning of time. However, there is apparently much less of that sort of fraud now than in the past because of regulations that are at least partially successful, and because global warming means that marginal wine regions have less need of assistance. In fact, there is more concern nowadays about fraud with respect to older and collectible bottles than there is about good everyday wine.

Second, your best bet for fraud-free wine is often the specific region or producer that originally had the problem. In the process of cleaning up their act, implementing damage control, and attempting to regain market share, the offenders need to be squeaky clean and above reproach. There’s the good news for the consumer. So buy and enjoy your Pontet-Canet, Brunello di Montalcino, and Austrian Riesling with abandon!

4. A Sommelier will Always Try to Sell You an Expensive Bottle

A good sommelier is like any good retailer: she or he does want to make a sale and generate cash flow, but real long-term business viability comes from good customer service, satisfied clients, and repeat business. A sommelier therefore needs to provide a wine that you will enjoy, both with your meal and for its own sake, and that falls within your budget. You will likely be offered more than one wine, all appropriate matches for the food but at different price points. If the suggested wines are too expensive for your budget, just point to a cheaper part of that section of the wine list and say that you are looking for "something more along these lines." The sommelier will understand, and at the same time you will actually sound knowledgeable to your guests! But don't forget that the wine contributes significantly to the enjoyment of the meal. Divide the price of the bottle by the two, three, or four people drinking it and compare that to the cost of one person's meal. You may then feel more comfortable about a slightly more expensive, and perhaps more appropriate, bottle.

The LCBO

You readers who don’t live in or near Ontario and are unfamiliar with the government liquor monopoly, the Liquor Control Board of Ontario (LCBO), are excused at this point and can sit back and await my next post. For the rest of us, here are four myths about the LCBO.

For a good backgrounder debunking common public myths about privatization, take a look at this article. I won't repeat all the information it contains.

5. The Status Quo Returns the Most Money to the Province

This myth was exploded by a report from the past decade, authored by a blue ribbon panel that was tasked by the LCBO to determine its future business strategy. I won’t go into detail since I devoted an entire previous post to this report, but the bottom line is that they unanimously recommended full privatization as the means to make the most money for the government and to provide the greatest customer satisfaction. Needless to say, the report was deep-sixed by the LCBO and the government as quickly and as thoroughly as possible.

Remember, the government of Ontario receives almost all of its advice about alcohol sales from the LCBO, so everything it hears is designed to support the LCBO. It then parrots the LCBO's words to the press and the public. How often do we hear that the LCBO provides billions of dollars to the government's coffers, and that we couldn't possibly give that up? The fact is that by eliminating the enormous costs of running the LCBO (stores built like palaces, inventory costs, highly paid help, etc.) and raking in income from licensing retail sales establishments (along with all the other taxes and duties that would not go away), the government would make more money from privatization than from the status quo.

Recently a new report on the subject was published by the C.D. Howe Institute. Once again, the strong recommendation is to enable private alcohol sales in order to boost revenues and provide better customer service. The report also offers an interesting, convincing, and unsettling insight into all aspects of alcohol sales in the province.

So why is the government so reluctant? It's not the money to be made; it's not public perception (a majority of Ontarians are in favour of privatization, according to a 2013 Angus Reid poll); and it's not the social implications (see Myth #6). The only explanation I can think of is that they are scared to death of the unions and of the impact of eliminating so many highly paid union jobs. That view is supported by the fact that the NDP is the only political party never to have favoured privatization at one time or another (the other parties have backed privatization, but only in opposition, never in government). Or perhaps the real roadblock is simply that governments hate change and the uncertainty that comes with it. Really, all it would take to provide a big step forward for the Ontario consumer is a little political courage. Oh well, perhaps in the 22nd century.

6. Private Liquor Stores Are Not Socially Responsible (Contrary to the LCBO)

There are several counters to this particularly insidious myth – I describe it thus because it is circulated by the LCBO itself. First, private stores operate in jurisdictions all over the world, including other Canadian provinces (and Ontario, see below), without unleashing a tsunami of teenagers buying booze. I could point to the study I brought up in a previous post, in which the LCBO ranked last for responsible selling, behind The Beer Store and corner stores (the latter selling cigarettes in this case). However, common sense alone should make it clear to any thinking person that private stores have a great deal more to lose than publicly owned stores if they abuse their privilege and sell to minors or intoxicated customers. A private store could lose its lucrative AGCO licence to sell alcohol; the LCBO would only get a rap on the knuckles. And think carefully about the LCBO's boasts about how many minors it turns away each year. What counts is how many got through! They never publish those numbers.

The biggest counter-argument, however, is that there are already hundreds of successful, socially responsible, private alcohol retailers in the province! And I'm not talking about the privately (and foreign) owned Beer Store. I am referring to the more than 210 LCBO Agency Stores in operation. These are small retailers (general stores and their ilk) in more remote, and not so remote, parts of the province where it would be uneconomic for the LCBO to build a full-scale palace of a store to serve a relatively small customer base. These stores profitably and responsibly sell LCBO products themselves, using their own salespeople. No wonder the LCBO doesn't want to bring these stores into the discussion!

7. The LCBO is Customer Oriented

I have patronized many a wine shop around the world and I’ve been to many other retailers, so I have a reasonable idea of what good customer service should be like:

- Provide what the customer wants – In the LCBO's world, what the customer wants is defined as whatever sells the most, sort of like Walmart. Then they throw in some labels in Vintages that have been awarded high scores by critics (see Myth #1). But where are the really interesting and unusual wines, the small producers, the Greek wines, the Swiss wines, the trocken wines from Germany, the orange wines, etc. that we read about and salivate over? Sure, one or two of them will show up from time to time, and from the LCBO's viewpoint that means the subject is covered. Even then, however, the only remaining bottle may be in a store at the other end of the province. And if you want to ratchet up your frustration a little, try to get an inter-store transfer set up. Success depends on the whim of the local store multiplied by the whim of the remote store, resulting in a depressingly low probability of success.

- Put good products on sale – To the LCBO, the word “sale” means “let’s get rid of the stuff that never sells.” They don’t have to put anything else on sale because there is nowhere else to buy it (see Myth #8). And what about the case discounts of 10-20% that almost every other wine merchant in the world provides?

- Work with the local community – One of the most infuriating practices of the LCBO involves staffing. Every three years or so they feel obliged to ship your local Product Consultant off to some other cookie-cutter store, just when you had established a good customer/retailer relationship, and then you have to start all over again. But at least they promote Ontario wines, right? Well, many local wines are produced in small quantities, but the LCBO business model requires wines to be available in a large number of its stores, which cuts out many of the small producers (the same problem applies to small producers from BC and abroad). On the other hand, the LCBO is happy to provide lots of prominent shelf space for "Cellared in Canada" (but mostly imported) swill produced by the large corporations.

8. The LCBO is Not a Monopoly

This myth is another favourite of the LCBO itself. They repeat it endlessly, justifying it by pointing out that there are, after all, other places to buy alcohol in the province. But what are those other retailers? First there is The Beer Store (TBS), a private monopoly run by three large foreign breweries for their own entertainment and profit. Nowhere else in the world has a government granted beer producers a monopoly on retail sales. And both are effectively monopolies because the LCBO and TBS generally try to sell non-overlapping brands. The Beer Store sells mostly the brands bottled by its owners while the LCBO handles the rest, which means that small craft brewers are effectively shut out of The Beer Store, where consumers naturally go first to buy beer. In addition, a recently revealed agreement between the two organizations restricts the sale of larger cases of beer to TBS alone.

The only other vendors of wine in the province are Ontario wine producers and import agencies. But you generally have to go to the winery itself to buy small-production, quality Ontario wine, and most of us don't live in those areas. Forget about the private off-winery stores: almost all of them (Wine Rack and The Wine Shop) are operated by the two giants of the Canadian wine industry, Constellation Brands and Andrew Peller. Of course, they only sell their own brands, which is hardly a threat to the LCBO monopoly. OK, what about foreign wines? There are only two ways to buy foreign wine outside the LCBO: through agencies or by belonging to a wine club. In general, you have to buy by the case from these vendors and wait days or weeks for delivery, so they aren't a viable alternative for the average wine buyer either. Of course there is also no meaningful competition in the sale of spirits, but that's not my main concern here.

The LCBO is not a monopoly? Sure, pull the other one too.